Are you working with C# and want to learn more about floating point numbers? In this guide, you will learn about the differences between the data types Float, Double, and Decimal. We will look at how you can use these effectively to perform precise calculations. Let's dive into the realm of floating point numbers together.

Key takeaways

- Float, Double, and Decimal are different data types for floating point numbers in C#.

- Each data type has a different precision, value range, and memory size.

- The choice of the right data type depends on how precise your calculations need to be and on what kind of values you are working with.

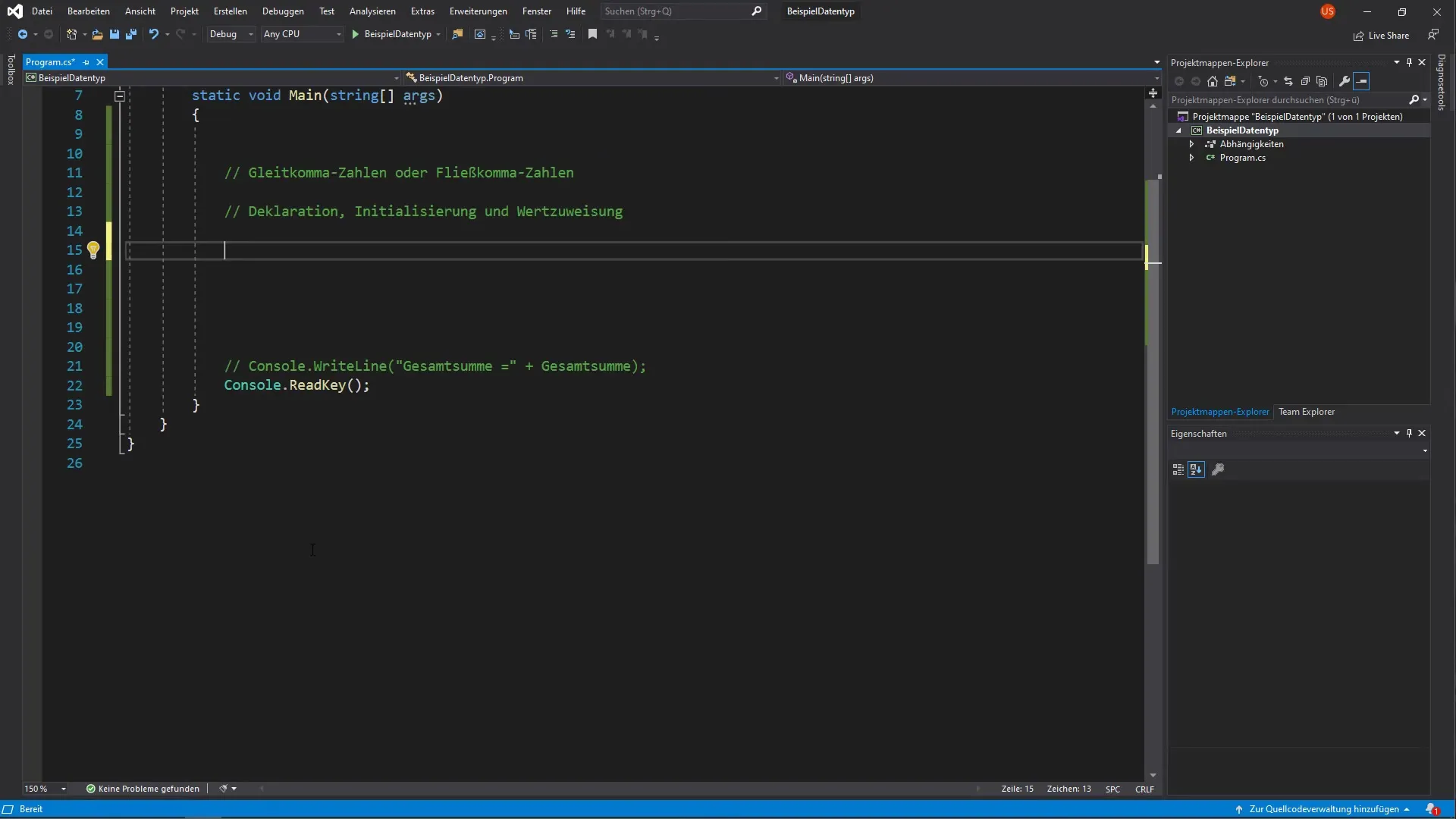

Step-by-step guide

Basics of floating point numbers

First, it is important to understand why programming distinguishes between integers and floating point numbers. Floating point numbers approximate real numbers and are needed to represent values that have a fractional part. The types are kept separate because integers are stored exactly and integer arithmetic is typically cheaper for the computer, whereas floating point values are stored with limited precision and can carry small rounding errors.
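To make the distinction concrete, here is a minimal sketch that divides the same two numbers once as integers and once as floating point values. The class and method names are made up for this illustration.

```csharp
using System;

public static class DivisionBasics
{
    // Integer division: the fractional part is discarded.
    public static int IntegerQuotient(int a, int b) => a / b;

    // Floating point division: the fractional part is kept.
    public static double FractionalQuotient(double a, double b) => a / b;

    public static void Main()
    {
        Console.WriteLine(IntegerQuotient(7, 2));     // 3
        Console.WriteLine(FractionalQuotient(7, 2));  // value 3.5 (the separator shown depends on the culture)
    }
}
```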

The Double data type

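Let's start with the Double data type. It occupies 64 bits (8 bytes) and offers roughly 15 to 17 significant digits of precision, which makes it the default choice for fractional numbers in C#. To declare a Double variable, you use the keyword double.

Note that the decimal separator in source code is a point, not a comma. C# literals always use the point, regardless of the regional settings of your system. Only formatted output follows the current culture.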
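As a small sketch of this, the following declares a Double and prints it with two different separators. The helper `Format` is hypothetical and only imitates different regional settings through a custom `NumberFormatInfo`.

```csharp
using System;
using System.Globalization;

public static class DoubleDeclaration
{
    // Hypothetical helper: formats a value with the given decimal separator.
    public static string Format(double value, string decimalSeparator)
    {
        var format = new NumberFormatInfo { NumberDecimalSeparator = decimalSeparator };
        return value.ToString(format);
    }

    public static void Main()
    {
        double price = 19.99;   // in source code the separator is always a point

        Console.WriteLine(Format(price, "."));  // 19.99
        Console.WriteLine(Format(price, ","));  // 19,99
    }
}
```

The variable holds the same value in both cases; only the text representation changes.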

The Float data type

Now let's look at the Float data type. A Float occupies 32 bits (4 bytes) and offers roughly 6 to 9 significant digits. To declare a Float variable, use the keyword float and append an f (or F) to the number to mark the literal as a Float.

If you omit the f, the compiler treats the number as a Double. Because a Double cannot be implicitly converted to a Float, the assignment then fails to compile.
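A minimal sketch of a Float declaration could look like this. The variable names are illustrative, and the commented-out line shows the assignment that the compiler rejects.

```csharp
using System;

public static class FloatDeclaration
{
    public static float Declare()
    {
        float temperature = 21.5f;      // the f suffix makes the literal a float
        // float broken = 21.5;         // would not compile (error CS0664): 21.5 is a double literal
        float converted = (float)0.5;   // an explicit cast from double also works
        return temperature + converted;
    }

    public static void Main()
    {
        Console.WriteLine(Declare() == 22f);   // True
    }
}
```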

The Decimal data type

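Third, we consider the Decimal data type. Decimal occupies 128 bits (16 bytes) and offers the highest precision of the three, with 28 to 29 significant digits, although its value range is much smaller than that of Double. To declare a Decimal variable, use the keyword decimal and append an m (or M) to the number.

Without the suffix, the literal is treated as a Double, which cannot be implicitly converted to a Decimal, so the compiler reports an error. The m tells the compiler unambiguously that you mean a Decimal.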
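The following sketch shows a Decimal declaration under the same assumptions as before: the names are made up, and the commented-out line is the variant that does not compile.

```csharp
using System;

public static class DecimalDeclaration
{
    public static decimal Total()
    {
        decimal amount = 1234.56m;   // the m suffix makes the literal a decimal
        // decimal broken = 1234.56; // would not compile: 1234.56 is a double literal
        decimal fee = 100;           // integer values convert to decimal implicitly
        return amount + fee;
    }

    public static void Main()
    {
        Console.WriteLine(Total() == 1334.56m);   // True
    }
}
```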

Differences in precision and usage

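The difference between Float, Double, and Decimal lies in their precision, value range, and memory size. A Float (4 bytes) offers roughly 6 to 9 significant digits, a Double (8 bytes) roughly 15 to 17, and a Decimal (16 bytes) 28 to 29. Float and Double store values in binary, so many decimal fractions such as 0.1 can only be approximated. Decimal stores values in base 10 and represents such fractions exactly, but it is slower and covers a smaller range. Depending on what your calculations require, you choose the appropriate data type. For scientific or general-purpose calculations, Double is usually the right choice. If your application works with money or other values where decimal fractions must be exact, Decimal is the best choice. Float is mainly useful when memory is tight and lower precision is acceptable.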
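The memory sizes can be checked directly with the `sizeof` operator. This is only a sketch; the class `TypeSizes` and its members are names chosen for the example.

```csharp
using System;

public static class TypeSizes
{
    public static int FloatBytes => sizeof(float);
    public static int DoubleBytes => sizeof(double);
    public static int DecimalBytes => sizeof(decimal);

    // Decimal trades range for precision: its largest value is far below that of double.
    public static bool DecimalRangeIsSmaller => (double)decimal.MaxValue < double.MaxValue;

    public static void Main()
    {
        Console.WriteLine(FloatBytes);             // 4
        Console.WriteLine(DoubleBytes);            // 8
        Console.WriteLine(DecimalBytes);           // 16
        Console.WriteLine(DecimalRangeIsSmaller);  // True
    }
}
```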

Examples of calculations

Now let's clarify the differences through examples. First, we'll declare a Float and perform a division.

Make sure to append the f to each number. After that, you can output the result.
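A Float division along these lines might look as follows. The operands 10 and 3 are assumed for illustration, since the original numbers are not given.

```csharp
using System;

public static class FloatDivision
{
    public static float Divide(float a, float b) => a / b;

    public static void Main()
    {
        float result = Divide(10f, 3f);   // both literals carry the f suffix
        Console.WriteLine(result);        // about 3.333333; the exact digits shown vary by .NET version
    }
}
```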

We’ll do something similar with Double.

Here, again use a point as the decimal separator. You may append a d to the number to mark it explicitly as a Double, but this is optional.
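The same division with Double, again with assumed operands, could be sketched like this. One literal uses the optional d suffix, the other a plain decimal point.

```csharp
using System;

public static class DoubleDivision
{
    public static double Divide(double a, double b) => a / b;

    public static void Main()
    {
        double result = Divide(10d, 3.0);   // 10d and 3.0 are both double literals
        Console.WriteLine(result);          // about 3.33333333333333; the exact digits shown vary by .NET version
    }
}
```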

And finally, the Decimal data type.

Here, too, you append the m so that the compiler knows you are using a Decimal number.
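For Decimal, a sketch with the same assumed operands looks like this. The result is printed with the invariant culture so that the separator is a point on every system.

```csharp
using System;
using System.Globalization;

public static class DecimalDivision
{
    public static decimal Divide(decimal a, decimal b) => a / b;

    public static void Main()
    {
        decimal result = Divide(10m, 3m);   // both literals carry the m suffix
        Console.WriteLine(result.ToString(CultureInfo.InvariantCulture));
        // 3.3333333333333333333333333333
    }
}
```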

When you run all three calculations, you will see that the results differ in the number of digits they carry.
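One way to make the difference measurable is to compute the same quotient as a Float and as a Double and look at the gap between the two. This is a sketch with a hypothetical helper, not part of the original walkthrough.

```csharp
using System;

public static class PrecisionComparison
{
    // Returns how far the float quotient is from the double quotient.
    public static double FloatErrorVersusDouble(float a, float b)
    {
        float narrow = a / b;          // rounded to float precision
        double wide = (double)a / b;   // computed in double precision
        return Math.Abs(narrow - wide);
    }

    public static void Main()
    {
        double gap = FloatErrorVersusDouble(10f, 3f);
        Console.WriteLine(gap > 0);       // True: the float result has already lost digits
        Console.WriteLine(gap < 0.000001); // True: the loss starts around the 8th significant digit
    }
}
```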

Importance of precision

The choice of the correct floating point data type is crucial for the accuracy of your results. The more precision a task demands, the more the differences between Float, Double, and Decimal matter. Rounding errors that look negligible in a single value can accumulate over many operations and distort the final result.
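A classic illustration of accumulating error is adding 0.1 ten times. The sketch below, with names chosen for the example, does this once with Double and once with Decimal.

```csharp
using System;

public static class AccumulatedError
{
    public static double SumDouble(int count)
    {
        double total = 0.0;
        for (int i = 0; i < count; i++) total += 0.1;    // 0.1 is only approximated in binary
        return total;
    }

    public static decimal SumDecimal(int count)
    {
        decimal total = 0.0m;
        for (int i = 0; i < count; i++) total += 0.1m;   // 0.1m is stored exactly
        return total;
    }

    public static void Main()
    {
        Console.WriteLine(SumDouble(10) == 1.0);    // False
        Console.WriteLine(SumDecimal(10) == 1.0m);  // True
    }
}
```

This is why comparisons of Double values are usually done with a small tolerance, while Decimal is preferred for amounts that must add up exactly.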

Summary – Floating point numbers in C#

You have learned the basics of the data types Float, Double, and Decimal and the differences between them. The choice of the right type is critical for the accuracy of your calculations. Always keep in mind how many digits you need and whether decimal fractions must be exact, and choose your data type accordingly.